Been a while since I was last here... ;D

It's also been a few months since I last took a vacation and went somewhere new for fun, so I decided to write some notes about it. Perhaps not the kind of thing you would post online, but eh, might as well share a bit of the fun (and the pain) with whoever's following this blog (hehe)...

For context, I've been eyeing one of the more northern European countries for a Christmas trip since I was here, but a plan never materialized: I was in Korea, then in Japan, both times with the crew I had from Canada. This time around, the crew was not assembling, so I was back to eyeing the Scandinavians. Luckily, one of my other friends just started their Master's at the same place as me, and they were also up for a trip. Let's go... to Copenhagen, I thought. It's not too far, we can probably reach it by train, I thought.

... and that's what we planned. Now, it was not initially this way: we thought of taking a cheap flight to Copenhagen before Christmas, then slowly taking our time through Germany with a few ICE trips afterwards. Looking at the air ticket prices though:

– 97CHF for one way, no luggage (not even carry-on) tickets from Geneva to Copenhagen (~1.xx hours)

– ~110CHF for the same trip but by trains and we stop at Hamburg as a middle point (~16 hours total)

At this point I think most people can see some reasons to take the trains, but would still think flying is the saner choice. Not me! I'm a bit of a train enthusiast, and I do enjoy traveling with my small luggage, so train it was. Our plan seemed nice:

- train to Hamburg (~11 hours)

- train to Copenhagen (~6 hours)

- train to Berlin (~8 hours)

- train to home (~11 hours)

Fitting some days in between the trips, we got ourselves a multi-city vacation. Sweet! We booked all the train trips (~220CHF total, not bad!) as well as all the accommodations a week before. My friend was a bit of an Airbnb hater, so we went for some of the cheaper hotels, and to be frank, the price was about the same.

Eagerly waiting for the departure date...

Day 1: Depart for Hamburg!

We got on the first train at around 2pm, a Swiss (SBB) one. As expected, the train was on time to the minute. Despite not being able to book seats in advance on these regular cross-country trains (unless you are getting 1st class, which we students are never touching), there were enough vacant seats for us to enjoy the ride all the way up until we arrived at Basel.

From then on, we changed to one of the German (DB) trains. Nothing too peculiar: I've been on DB ICE (high speed railway) before, and the trains they use are pretty similar. Our pre-booked seats seemed full, but that's alright, though it's a bit strange that it did not show the station we were getting off at (later on we found out from the announcements that they had to switch the actual train used on the route at the last minute and did not manage to move all the seat data over). Unlike the previous ride, this time we were sitting on the train for 4 hours straight, so we got comfortable and I dozed off for a little bit.

I prepared a lot of snacks for the trip: some grapes, a pack of Zweifel, a pack of chocolate waffles, apples, bananas, coffee and Apfelschorle. It was a bit overkill, but who knows how your stomach will act during an 11-hour train trip? In the end, we managed to munch through all the fruits and drinks, leaving half of the waffles and never opening the Zweifel. During this first DB train, we were sitting with a German father and his kindergarten-aged daughter. Some thoughts of sharing snacks crossed my mind, but as soon as we saw them eating pre-cut fruits and vegetables together, we kept ourselves away from unhealthy snacks as well and just shared some grapes between us and the daughter. During the trip, she was also trying to talk to us, but her German was too much for my untrained ears, and so we could only smile awkwardly. Unfortunate!

Fulda

Now, no Deutsche Bahn trip would be complete without a bit of Verspätung (delay). It was no simple one either: the delay built up and up and up over time, adding a few minutes at each station, before reaching a crescendo in Frankfurt. There, the delay seemed to have put our train in conflict with another on the same track, leaving it sitting behind for 15(!) minutes.

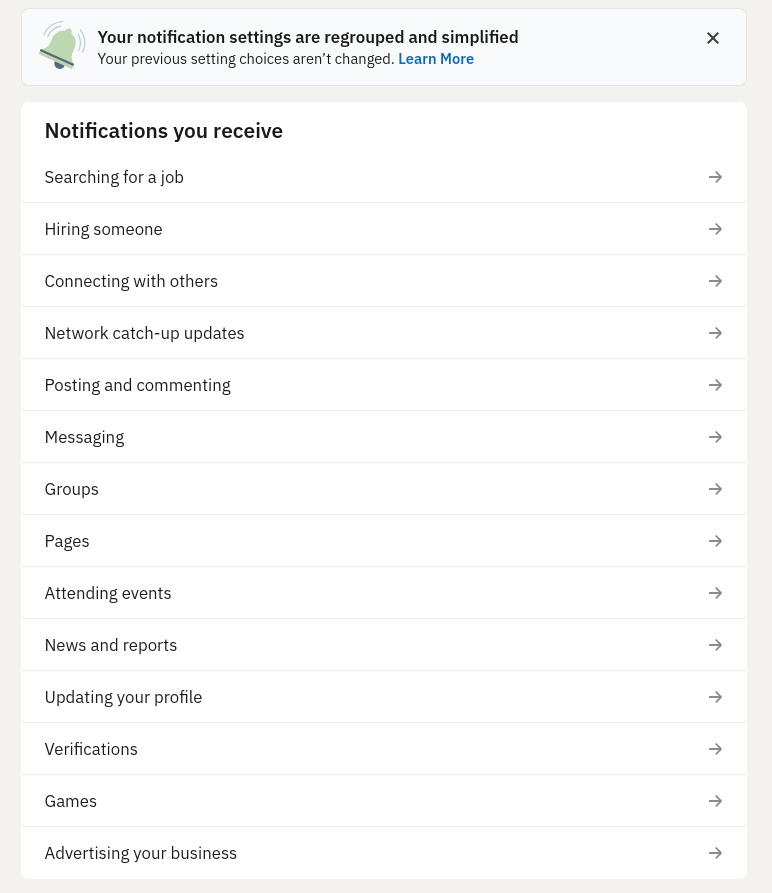

(this image was lying to you, the schedule itself was modified from Frankfurt on)

The DB app I had helpfully let us know that because of the delay we would miss our connection, and offered a simple “choose an alternative” page. Well done DB app! I guess it happens often enough that having its own page is worth the hassle ;)

With all that done, we were told to get off in Fulda and spend about half an hour there. We would arrive around 40 minutes later than scheduled, but that's alright: the hotel was already informed that we would be checking in quite late – another 40 minutes would make no difference.

Fulda is a small town, but has its own interesting Christmas market. We didn't have time to actually buy something there, but the smell of Glühwein was very inviting. Next time!

Arriving in Hamburg

The second train had some minor delays as well, but nothing painful: we arrived in Hamburg a bit past 0:40. Not too bad — the original schedule was 23:55~. Big city!

(I forgot to take a photo at the time, but it was dark and I was tired)

With little patience left for making HVV (the local transport system) accounts or dealing with ticket machines, we decided to just walk to the hotel — should take us around 12 minutes according to Google Maps.

Of course, “misfortune never comes alone” (a Vietnamese idiom): we walked to the wrong branch of the hotel instead! And so another 20 minutes was spent...

We arrived at our correct hotel and got our room at around 1:30. At this time, all I could think of was to shower and sleep.

Day 2: Hamburg Day!

Did I tell you we booked a visit at freaking 9am in advance?

We passed some time on the train to Fulda looking at possible locations to visit. One of them was the Miniatur Wunderland, which (incorrectly, not sure if maliciously) informed us that all visits for Saturday from 10am to 5pm were fully booked. We had two choices: 9am, or night... And nights are for Christmas markets, and so we took the 9am tickets, without knowing the incoming hit to our sleep budget...

... and so we were up at 7:30am, totalling 5.5 hours in bed. The huge coffee bottle from home proved useful, as it kept me awake for the Miniatur Wunderland visit. We walked a few minutes from the hotel, getting a Day pass from the HVV app on the way. Interesting fact: the HVV iOS app requires registration before getting a pass (yuck!) but works perfectly afterwards. On the Android app, you can do it as a guest, but then it bought my friend the pass for the next day...

Miniatur Wunderland

Miniatur Wunderland is a permanent exhibition of miniature cities, built to scale with cars, buildings and people. And moving trains! They've got various places around the world: from Swiss cities, Italian places, Monaco race tracks, French farms, Hamburg itself... to the further Norwegian ports, the American landscape, Mexican deserts and even Antarctica!

Did I tell you trains run through all of them?

I only took stills, so perhaps a YouTube clip would describe it so much better...

Needless to say, I was ecstatic about the models. They say it took a total of ~1.2 million man-hours to create the props, and I think it was so worth it. For the price of 17EUR (student discount applied) I think you're going to have a great time. Highly recommended!

Also, not in the photos: you can see the operator room watching over the model simulation and the train network in real time! How cool is that!!

Another extra: one of the techniques used to “simulate” strong waves was to build an uneven surface, project a moving wave on top and synchronize the ship's movement with the moving waves... Impressive!

Hamburg Kunsthalle

We went for the Kunsthalle (art museum) next, but since it's mostly artworks, I took no photos.

There were two buildings: one for the pre-modern art, and the other for modern exhibitions. Regardless, you get a ticket for both (8EUR on student discount) from the former building with the main entrance.

The first building organizes itself as a story moving from Realism to Impressionism, before touching a bit on Surrealism. I'm no art student, so definitely not here to comment. But I found the idea interesting: if Realism's motive was to capture the artist's rendering of nature in its full details, then an Impressionist wants to do the same thing, but makes the viewer fill in the details by drawing impressions instead of the details themselves. Of course, as viewers, we inject details drawing from our common experience, while keeping the “soul” of the image intact through the impressions. A bit of work makes you enjoy it more, I might say. Is Modernism the next step, where the “soul” is even more abstract, allowing us, the viewers, to inject our feelings and thoughts into our own personal rendering of the art? I pondered a little bit... and then moved to the next building.

The second building hosts a few unconnected exhibitions, each on its own floor. Confusing and abstract is the common theme, I would say, as the art ranges from gory pieces of flesh to... something resembling myself playing with MS Paint for the first time. Interestingly, each exhibition gives us a few paragraphs of context on the artist and the collection: their upbringing, their interests and the message they hope to convey through the pieces.

Still not so clear about whether I would “get” the exhibitions, but something dawned on me today: yes, the pieces by themselves are confusing and abstract, but it seemed that none was meant to be viewed on its own. Taken together, one finds a bit of structure: a common through-line, reaching out from the first impressions of each piece, that hopefully one can take and feed back into one's own rendering process.

A bit like osu! mapping: one might find a common theme when you look at a piece both as individual patterns and as a whole, and use that as a recursive process...

Well, I felt a touch smarter leaving the museum than entering.

Food...

We stopped for food in-between. Highlight of the day was definitely the Currywurst I got in one of the Christmas markets:

Soft and tasty bratwursts against the classic flavor of curry, just the perfect combination. Worth it every time.

Now, for the un-highlight. We decided to have a bit of Korean food for lunch, as our home(?)town lacks it. Having settled on “Chingu”, we made our order... and found out immediately afterwards that it was not really authentic, and seemed to be run by a group of Vietnamese cooks and staff.

I got myself a bowl of Kimchi Jjigae (one of my favorite Korean dishes), while my friend got themselves some Japchae with chicken, and we shared some fried chicken together.

The Jjigae had the ingredients, but lacked the complex taste of pork belly / kimchi rinse in its soup. The end product reminds you of its salt and MSG, which aren't bad by themselves, but they should be elevating the original flavors instead of replacing them...

The fried chicken, though, came with some wacky sauce which turned us away from it as well. Not the best experience. But the yuzu tea was good.

There is another story involving ramen... but that's left for another time!

Internationales Maritimes Museum Hamburg

This one completely blew my mind, despite being a last-minute addition.

From the main station, we needed to take the S4 not-really-subway-but-is-much-underground line, and then walk a bit further. The sun had already made most of its way down by 4pm, so it was not the most positive walk we had. One interesting note: we walked from Tokiostrasse to Osakaallee, ending up at a bridge across a river (reminding me a lot of Osaka) before getting to the museum itself.

We arrived just around 4:30pm, when the museum started its almost-closing-time ticket sale of 9EUR. Wonder why so expensive? You're about to find out. First thing we were instructed: please head to the 9th floor and make your way down as you visit the exhibitions. So we headed to the elevator. As the doors opened up, we saw in front of our eyes...

Thousands of mini models of ships!!! What an opening. And it just keeps getting better:

And bigger...:

And even bigger...:

And more exotic:

Can you believe, even for captain hats, they managed to find hundreds???

At some point we jokingly said, “well, grab your Legos, you ain't gonna build any of these ships”. What do you see next?

(lego guy for scale in the 2nd photo, the ship is so big...)

But my favorite thing is that this museum also has a damn to-scale miniature model of the whole Hamburg port!?!?

(not sure if they have any connections to Miniatur Wunderland... also, damn, this is dream Cities Skylines material...)

My jaw was on the floor the whole time during this 1+ hour visit. I mean, this is no museum, this is just Hamburg flexing on all other harbor cities... To be frank, I think this will be the best maritime museum I will ever see in my life, and there is just zero competition.

Even the train station on the way home gets a model ship.

Home

... and that's all for the day. I'm very pleased! Hamburg's cultural side is perfect so far. We grabbed some Glühwein on our way home, and joined some remote friends for a gaming stream to spend the night.

And then there's me spending hours writing this up. I'm happy to have had this experience, so it's probably worth it to jot it down while it's still fresh.

Until next time! Trains to Copenhagen tomorrow afternoon.